The Google File System (GFS) paper, published in 2003, marked a paradigm shift in distributed storage system design. It showed that high-performance, scalable, and reliable storage could be built from inexpensive commodity computers, with fault tolerance provided by software rather than by specialized hardware.

The Genesis of GFS and Its Lasting Impact

By publishing a detailed, practical design, GFS drastically lowered the barriers to entry for distributed file systems and inspired subsequent systems such as the Hadoop Distributed File System (HDFS), which grew out of the Apache Nutch project and was developed largely at Yahoo. Today, HDFS is a cornerstone of the big data ecosystem, a testament to GFS’s enduring design principles.

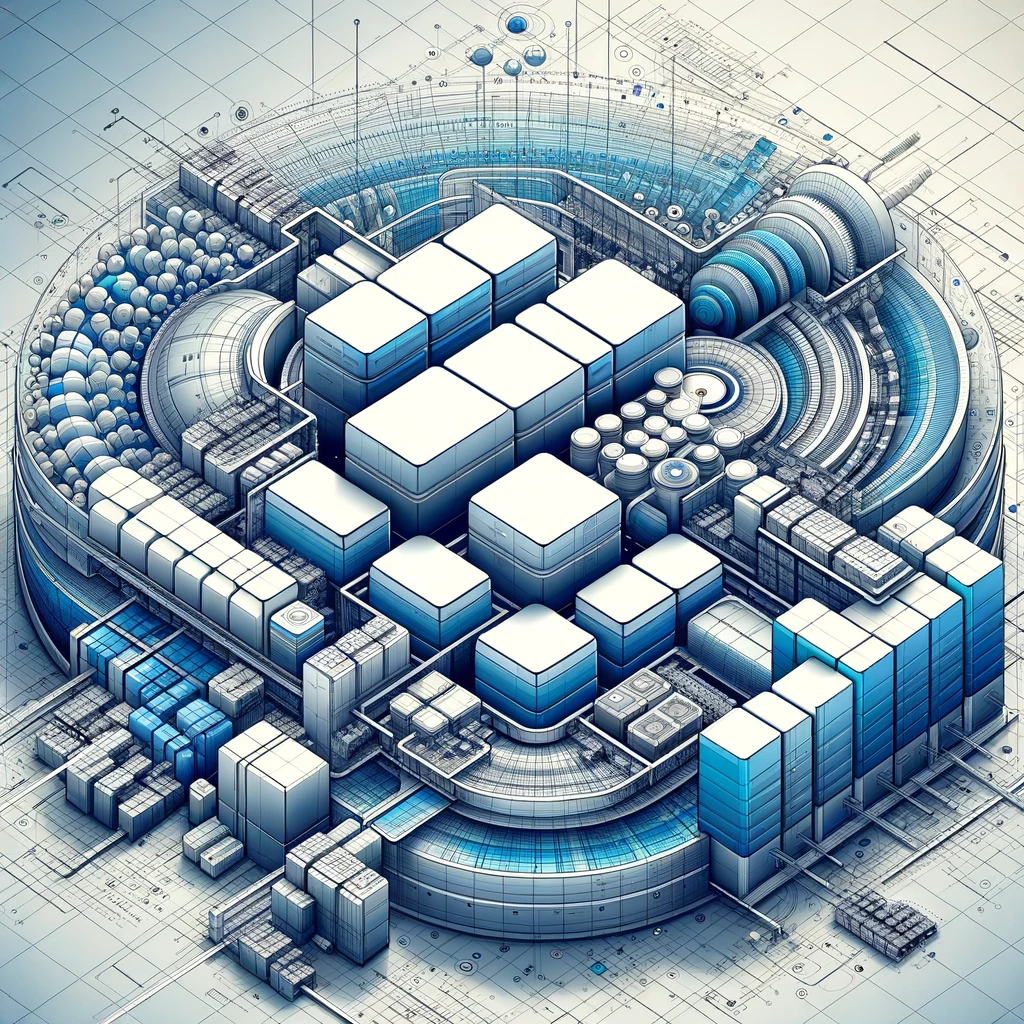

GFS Architecture: A Masterful Design

The GFS cluster is composed of:

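- A Master Node: A single master per cluster acts as the metadata server. It keeps all file system metadata in memory, including the namespace of directories and files, access control information, the mapping from files to chunks, and the current locations of each chunk's replicas.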

- Chunkservers: Serve as the workhorses, storing file data as chunks, each kept as an ordinary file on the chunkserver's local Linux file system, and serving reads and writes directly to clients.

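- Clients: Application code linked with the GFS client library, which implements the file system API and talks to the master and chunkservers to read or write data on the application's behalf.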
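To make the master's role more concrete, here is a minimal sketch of its in-memory tables, assuming a toy model in which the namespace maps full pathnames to lists of chunk handles. In GFS, chunk locations are not persisted. The master learns them from chunkservers and keeps them current through periodic HeartBeat messages. The class and method names (`Master`, `create_chunk`, `heartbeat`) are hypothetical and are not taken from GFS itself.

```python
from dataclasses import dataclass, field


@dataclass
class Master:
    """Toy model of GFS master metadata (hypothetical names, not GFS's real code)."""

    # Namespace: full pathname -> ordered list of chunk handles for that file.
    files: dict[str, list[int]] = field(default_factory=dict)
    # Chunk handle -> addresses of chunkservers currently holding a replica.
    # Not persisted: rebuilt from what chunkservers report.
    locations: dict[int, set[str]] = field(default_factory=dict)
    next_handle: int = 1

    def create_chunk(self, path: str) -> int:
        """Append a new chunk to `path` and return its (toy) chunk handle."""
        handle = self.next_handle
        self.next_handle += 1
        self.files.setdefault(path, []).append(handle)
        self.locations.setdefault(handle, set())
        return handle

    def heartbeat(self, server: str, chunks: set[int]) -> None:
        """Refresh the location table from one chunkserver's report of its chunks."""
        for handle, holders in self.locations.items():
            if handle in chunks:
                holders.add(server)
            else:
                holders.discard(server)


m = Master()
h = m.create_chunk("/logs/web-00")
m.heartbeat("cs1:7000", {h})
m.heartbeat("cs2:7000", {h})
m.heartbeat("cs1:7000", set())  # cs1 no longer reports the chunk
print(m.files["/logs/web-00"], sorted(m.locations[h]))  # [1] ['cs2:7000']
```

Rebuilding locations from chunkserver reports, rather than persisting them, means the chunkserver stays the final authority on which chunks it actually holds.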

Interconnecting Components for Distributed Efficiency

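In GFS, the master and chunkservers communicate over the network. The master exchanges periodic HeartBeat messages with each chunkserver to give it instructions and collect its state. Because file data is spread across many chunkservers, the system scales horizontally: more machines can be added as data volume grows. To read a file, a client first asks the master which chunk holds the requested byte offset and where that chunk's replicas live. It caches this metadata and then fetches the data directly from a chunkserver. File data never flows through the master, which keeps the master from becoming a bandwidth bottleneck.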
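The read path described above can be sketched as follows, assuming in-memory stand-ins for the master and chunkservers instead of real RPCs. The client converts a byte offset into a chunk index, asks the master for the chunk handle and replica list only on a cache miss, and then reads the bytes from a chunkserver. `FakeMaster`, `FakeChunkserver`, and `Client` are hypothetical names. For simplicity, the sketch only handles reads that fit inside one chunk.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # GFS's 64 MB chunk size


class FakeMaster:
    """Hypothetical stand-in for the master's metadata lookup."""

    def __init__(self, table):
        # (path, chunk_index) -> (chunk_handle, [replica addresses])
        self.table = table
        self.calls = 0

    def lookup(self, path, chunk_index):
        self.calls += 1
        return self.table[(path, chunk_index)]


class FakeChunkserver:
    """Hypothetical chunkserver keeping chunk contents in memory."""

    def __init__(self, chunks):
        self.chunks = chunks  # chunk_handle -> bytes

    def read(self, handle, offset, length):
        return self.chunks[handle][offset:offset + length]


class Client:
    def __init__(self, master, chunkservers):
        self.master = master
        self.chunkservers = chunkservers  # address -> FakeChunkserver
        self.cache = {}  # (path, chunk_index) -> (handle, replicas)

    def read(self, path, offset, length):
        """Read within one chunk: metadata from the master, bytes from a chunkserver."""
        chunk_index = offset // CHUNK_SIZE
        offset_in_chunk = offset % CHUNK_SIZE
        if offset_in_chunk + length > CHUNK_SIZE:
            raise ValueError("this sketch only handles reads within a single chunk")
        key = (path, chunk_index)
        if key not in self.cache:  # contact the master only on a cache miss
            self.cache[key] = self.master.lookup(path, chunk_index)
        handle, replicas = self.cache[key]
        # A real client would pick the closest replica; this sketch takes the first.
        server = self.chunkservers[replicas[0]]
        # The data comes straight from the chunkserver, never through the master.
        return server.read(handle, offset_in_chunk, length)


master = FakeMaster({("/data/f", 1): (42, ["cs2"])})
client = Client(master, {"cs2": FakeChunkserver({42: b"hello world"})})
print(client.read("/data/f", CHUNK_SIZE + 6, 5))  # b'world'
print(client.read("/data/f", CHUNK_SIZE, 5))      # b'hello' (cached metadata)
print(master.calls)                               # 1
```

The second read reuses the cached chunk location, so the master handles one small metadata request while the chunkserver serves both data reads.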

Robust Data Storage with Fixed-Size Chunks

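GFS splits each file into fixed-size chunks of 64 MB, each identified by a globally unique 64-bit chunk handle assigned by the master. Every chunk is replicated on multiple chunkservers (three by default), so data survives the failure of individual machines. Consecutive chunks of a large file usually live on different chunkservers, so reading a single file can require contacting several of them. This separation of file metadata from chunk storage became a classic and influential pattern in distributed file systems.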
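As a rough illustration of why one read can touch several chunkservers, the sketch below splits a byte range into per-chunk pieces and assigns each chunk three replicas. The helper names are hypothetical. The round-robin placement is a deliberate simplification: the GFS paper describes choosing chunkservers based on factors such as disk utilization, recent chunk creations, and spreading replicas across racks.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB chunks
REPLICATION = 3                # GFS default: three replicas per chunk


def split_range(offset, length, chunk_size=CHUNK_SIZE):
    """Split a file byte range into (chunk_index, offset_in_chunk, length) pieces."""
    pieces = []
    end = offset + length
    while offset < end:
        index = offset // chunk_size
        within = offset % chunk_size
        n = min(chunk_size - within, end - offset)  # stop at the chunk boundary
        pieces.append((index, within, n))
        offset += n
    return pieces


def place_replicas(num_chunks, servers, replication=REPLICATION):
    """Hypothetical round-robin placement: each chunk on `replication` distinct servers."""
    if replication > len(servers):
        raise ValueError("not enough chunkservers for the replication factor")
    return {
        i: [servers[(i + k) % len(servers)] for k in range(replication)]
        for i in range(num_chunks)
    }


MB = 1024 * 1024
pieces = split_range(10 * MB, 150 * MB)
print([(i, w // MB, n // MB) for i, w, n in pieces])  # [(0, 10, 54), (1, 0, 64), (2, 0, 32)]
print(place_replicas(len(pieces), ["cs1", "cs2", "cs3", "cs4"]))
```

In this example, a 150 MB read starting at offset 10 MB spans three chunks. With four chunkservers, those chunks land on different combinations of machines, so the client reads from more than one chunkserver.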

Overcoming Scalability Challenges: The Advent of Colossus

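While GFS was innovative, it ran into scaling limits as Google’s workloads grew. The most notable was its single master, which held all metadata in memory and therefore limited how many files one cluster could manage. These challenges led to the creation of Colossus, the successor to GFS, which stores file system metadata in Bigtable rather than in a single master’s memory. Colossus underpins Google’s products and Google Cloud, offering greater scalability and availability to keep pace with the rapidly growing data demands of modern applications.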

In the end, the legacy of GFS is evident not only in its direct descendants but also in the foundational concepts it introduced, which continue to shape the future of distributed storage systems.